Another week of @dailyshoot:

PS. Check out the updated dailyshoot.com web site.

Monday, December 7, 2009

Sunday, November 29, 2009

Daily Shoot, week 2

As I mentioned last week, I've been following @dailyshoot for a series of daily photo assignments. Here's what I shot this week:

Saturday, November 28, 2009

Sling over HTTP

A few days ago I posted about Jackrabbit, and now it's time to follow up with Sling as a means of accessing a content repository over HTTP. Apache Sling is a web framework based on JCR content repositories like Jackrabbit, and among other things it adds some pretty nice ways of accessing and manipulating content over HTTP.

The easiest way to get started with Sling is to download the "Sling Standalone Application" from the Sling downloads page. Unpack the distribution package and start the Sling application with "java -jar org.apache.sling.launchpad.app-5-incubator.jar". Like Jackrabbit, Sling can by default be accessed at http://localhost:8080/. There's a 15-minute tutorial that you can check out to learn more about Sling.

Since Sling comes with an embedded Jackrabbit repository, it also supports much of the WebDAV functionality covered in my previous post. Instead of rehashing those points, this post takes a look at the additional HTTP content access features in Sling.

CR1: Create a document

Like with Jackrabbit, all documents in Sling have a path that is used to identify and locate the document. Sling solves the problem of having to come up with the document name by supporting a virtual "star resource" that'll automatically generate a unique name for a new document. Thus instead of having to think of a URL like "http://localhost:8080/hello" in advance, the new document can be created by simply posting to the star resource at "http://localhost:8080/*".

The Sling POST servlet is a pretty versatile tool, and can be used to perform many content manipulation operations using normal HTTP POST requests and the application/x-www-form-urlencoded format used by normal HTML forms. With the POST servlet, the example document can be created like this:

$ curl --data 'title=Hello, World!' --data 'date=2009-11-17T12:00:00.000Z' \
    --data 'date@TypeHint=Date' --user admin:admin \
    'http://localhost:8080/*'

The 201 Created response will contain a Location header that points to the newly created document. In this case the returned URL is "http://localhost:8080/hello_world_" based on some document title heuristics included in Sling. If you run the command again you'll get a different URL since the Sling star resource will automatically avoid overwriting existing content.
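For scripting this from a program rather than from curl, the same CR1 request can be assembled with Python's standard library. This is a minimal sketch, assuming the default standalone address and the admin:admin credentials used above; the helper names basic_auth, create_request and create_document are my own, and only create_document actually contacts the server.

```python
import base64
from urllib.parse import urlencode
from urllib.request import Request, urlopen


def basic_auth(user, password):
    """Return an HTTP Basic Authorization header value."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


def create_request(base_url, properties, user="admin", password="admin"):
    """Build a POST to the Sling star resource.

    `properties` is a list of (name, value) pairs, sent in the same
    application/x-www-form-urlencoded format an HTML form would use.
    """
    body = urlencode(properties).encode("ascii")
    request = Request(base_url.rstrip("/") + "/*", data=body, method="POST")
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    request.add_header("Authorization", basic_auth(user, password))
    return request


def create_document(base_url, properties):
    """Send the request (needs a running Sling) and return the new document URL."""
    with urlopen(create_request(base_url, properties)) as response:
        # The 201 Created response carries the new document URL in Location.
        return response.headers["Location"]


# The CR1 example properties, including the @TypeHint companion field.
CR1_PROPERTIES = [
    ("title", "Hello, World!"),
    ("date", "2009-11-17T12:00:00.000Z"),
    ("date@TypeHint", "Date"),
]
```

Calling create_document("http://localhost:8080", CR1_PROPERTIES) against a running instance should return a URL like the hello_world_ one mentioned above. Percent-encoding the body with urlencode avoids relying on the server tolerating the raw space and punctuation in "Hello, World!".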

Pros:

- A single standard POST request is enough

- The HTML form format is used for the POST body

- Automatically generated clean and readable document URL

Cons:

- The star resource URL pattern is fixed and creates an unnecessarily tight binding between the client and the server

CR2: Read a document

Sling contains multiple ways of accessing the document content in different renderings. In fact much of the power of Sling comes from the extensive support for rendering underlying content in various different and easily customizable ways.

Unfortunately at least the latest 5-incubator version of the Sling Application doesn't support any reasonable default rendering at the previously returned document URL. The client needs to explicitly know to add a ".json" or ".xml" suffix to the document URL to get a JSON or XML rendering of the document.

$ curl http://localhost:8080/hello_world_.json
{
  "title": "Hello, World!",
  "date": "Tue Nov 17 2009 12:00:00 GMT+0100",
  "jcr:primaryType": "nt:unstructured"
}

$ curl http://localhost:8080/hello_world_.xml
<?xml version="1.0" encoding="UTF-8"?>
<hello_world_ xmlns:fn="http://www.w3.org/2005/xpath-functions"
    xmlns:fn_old="http://www.w3.org/2004/10/xpath-functions"
    xmlns:xs="http://www.w3.org/2001/XMLSchema"
    xmlns:jcr="http://www.jcp.org/jcr/1.0"
    xmlns:mix="http://www.jcp.org/jcr/mix/1.0"
    xmlns:sv="http://www.jcp.org/jcr/sv/1.0"
    xmlns:sling="http://sling.apache.org/jcr/sling/1.0"
    xmlns:rep="internal"
    xmlns:nt="http://www.jcp.org/jcr/nt/1.0"
    jcr:primaryType="nt:unstructured"
    date="2009-11-17T12:00:00.000+01:00"
    title="Hello, World!"/>

The JCR document view format is used for the XML rendering.
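A client consuming these renderings only needs a JSON parser or an XML parser. The sketch below, with hypothetical helper names, parses responses like the two shown above. In the document view XML every property is simply an attribute of the element named after the node, and prefixed names such as jcr:primaryType come back in ElementTree's "{uri}local" form. Neither parse result tells you that date is a Date rather than a string.

```python
import json
import xml.etree.ElementTree as ET

JCR_NS = "http://www.jcp.org/jcr/1.0"


def rendering_url(document_url, extension):
    """Append the rendering suffix ("json" or "xml") that Sling expects."""
    return document_url.rstrip("/") + "." + extension


def parse_json_rendering(text):
    """Parse the .json rendering into a plain dict of property values."""
    return json.loads(text)


def parse_document_view(xml_text):
    """Parse the .xml (JCR document view) rendering into a dict.

    Unprefixed property names stay as-is; prefixed names are reported as
    "{namespace-uri}local-name". xmlns declarations are consumed by the
    parser and are not attributes, so they do not appear in the result.
    """
    root = ET.fromstring(xml_text.encode("utf-8"))
    return dict(root.attrib)


def primary_type(document_view_properties):
    """Look up jcr:primaryType in the result of parse_document_view."""
    return document_view_properties.get(f"{{{JCR_NS}}}primaryType")
```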

Pros:

- A single GET request is enough

- Both the JSON and XML formats are easy to consume

Cons:

- Simply GETting the document URL doesn't return anything useful

- The ".json" and ".xml" URL patterns create an unnecessary binding between the client and the server

- Neither rendering contains property type information

- The XML rendering contains unnecessary namespace declarations

CR3: Update a document

The Sling POST servlet also supports document updates, so we can just POST the updated properties to the document URL:

$ curl --data 'history=Document date updated' \
    --data 'date=2009-11-18T12:00:00.000Z' \
    --data 'date@TypeHint=Date' --user admin:admin \
    http://localhost:8080/hello_world_

Pros:

- A single standard POST request is enough

- The HTML form format is used for the POST body

Cons:

- None.
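Because updates use the same form format as creation, a client can generate the @TypeHint companion fields automatically. This is a minimal sketch, assuming timezone-aware datetime values should be sent as UTC timestamps in the same millisecond format as the curl example; sling_form and update_request are hypothetical names, and authentication is left out for brevity.

```python
from datetime import datetime, timezone
from urllib.parse import urlencode
from urllib.request import Request


def sling_form(properties):
    """Encode a dict of properties for the Sling POST servlet.

    datetime values are written as UTC timestamps with millisecond
    precision, followed by a matching "name@TypeHint=Date" field so the
    servlet stores a Date rather than a String.
    """
    pairs = []
    for name, value in properties.items():
        if isinstance(value, datetime):
            if value.tzinfo is None:
                raise ValueError(f"{name}: use a timezone-aware datetime")
            utc = value.astimezone(timezone.utc)
            stamp = utc.strftime("%Y-%m-%dT%H:%M:%S.") + f"{utc.microsecond // 1000:03d}Z"
            pairs.append((name, stamp))
            pairs.append((name + "@TypeHint", "Date"))
        else:
            pairs.append((name, str(value)))
    return urlencode(pairs)


def update_request(document_url, properties):
    """POST the changed properties straight to the existing document URL."""
    body = sling_form(properties).encode("ascii")
    request = Request(document_url, data=body, method="POST")
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request
```

Rejecting naive datetimes is a deliberate choice here: converting them would silently assume the client's local time zone.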

CR4: Delete a document

You can either use the special ":operation=delete" feature of the Sling POST servlet or a standard DELETE request to delete a document:

$ curl --data ':operation=delete' --user admin:admin \
    http://localhost:8080/hello_world_

$ curl --request DELETE --user admin:admin \
    http://localhost:8080/hello_world_

Pros:

- A standard DELETE or POST request is all that's needed

Cons:

- None.
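Both Sling delete variants translate directly into standard-library requests. The sketch below only builds them (delete_requests is a hypothetical name); pass either one to urllib.request.urlopen, together with suitable credentials, to actually delete the node.

```python
from urllib.parse import urlencode
from urllib.request import Request


def delete_requests(document_url):
    """Return the two equivalent ways of deleting a Sling document."""
    # Variant 1: a form POST carrying the special :operation=delete field.
    via_post = Request(
        document_url,
        data=urlencode({":operation": "delete"}).encode("ascii"),
        method="POST",
    )
    via_post.add_header("Content-Type", "application/x-www-form-urlencoded")
    # Variant 2: a plain HTTP DELETE with no body.
    via_delete = Request(document_url, method="DELETE")
    return via_post, via_delete
```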

Monday, November 23, 2009

Jackrabbit over HTTP

Last week I posted a simple set of operations that a "RESTful content repository" should support over HTTP. Here's a quick look at how Apache Jackrabbit meets this challenge.

To get started I first downloaded the standalone jar file from the Jackrabbit downloads page, and started it with "java -jar jackrabbit-standalone-1.6.0.jar". This is a quick and easy way to get a Jackrabbit repository up and running. Just point your browser to http://localhost:8080/ to check that the repository is there.

Jackrabbit comes with a built-in advanced WebDAV feature that gives you pretty good control over your content. The root URL for the default workspace is http://localhost:8080/server/default/jcr:root/ and by default Jackrabbit grants full write access if you specify any username and password.

Note that Jackrabbit also has another, filesystem-oriented WebDAV feature that you can access at http://localhost:8080/repository/default/. This entry point is great for dealing with simple things like normal files and folders, but for more fine-grained content you'll want to use the advanced WebDAV feature as outlined below.

CR1: Create a document

All documents (nodes) in Jackrabbit have a pathname just like files in a normal file system. Thus to create a new document, we first need to come up with a name and a location for it. Let's call the example document "hello" and place it at the root of the default workspace, so we can later address it at the path "/hello". The related WebDAV URL is http://localhost:8080/server/default/jcr:root/hello/.

You can use the MKCOL method to create a new node in Jackrabbit. An MKCOL request without a body will create a new empty node, but you can specify the initial contents of the node by including a snippet of JCR system view XML that describes your content. In our case we want to specify the "title" and "date" properties. Note that JCR does not support date-only properties, so we need to store the date value as a more accurate timestamp.

The full request looks like this:

$ curl --request MKCOL --data @- --user name:pass \
    http://localhost:8080/server/default/jcr:root/hello/ <<END
<sv:node sv:name="hello" xmlns:sv="http://www.jcp.org/jcr/sv/1.0">
  <sv:property sv:name="message" sv:type="String">
    <sv:value>Hello, World!</sv:value>
  </sv:property>
  <sv:property sv:name="date" sv:type="Date">
    <sv:value>2009-11-17T12:00:00.000Z</sv:value>
  </sv:property>
</sv:node>
END

The resulting document is available at the URL we already constructed above, i.e. http://localhost:8080/server/default/jcr:root/hello/.
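Generating the system view XML from code, rather than writing it by hand, also removes one of the cons listed below: the node name is given once and used for both the URL and the sv:name attribute. This is a sketch with hypothetical helper names, using the property names from the CR1 definition. The text/xml content type is my assumption (the curl command above sends curl's default form content type), and credentials are left out.

```python
import xml.etree.ElementTree as ET
from urllib.request import Request

SV_NS = "http://www.jcp.org/jcr/sv/1.0"
ET.register_namespace("sv", SV_NS)


def sv(local_name):
    """Qualified name in the JCR system view namespace."""
    return f"{{{SV_NS}}}{local_name}"


def system_view(node_name, properties):
    """Serialize (name, jcr_type, value) triples as a JCR system view node."""
    node = ET.Element(sv("node"), {sv("name"): node_name})
    for name, jcr_type, value in properties:
        prop = ET.SubElement(node, sv("property"), {sv("name"): name, sv("type"): jcr_type})
        ET.SubElement(prop, sv("value")).text = value
    return ET.tostring(node, encoding="unicode")


def mkcol_request(workspace_url, node_name, properties):
    """Build an MKCOL that creates node_name directly below workspace_url."""
    url = f"{workspace_url.rstrip('/')}/{node_name}/"
    body = system_view(node_name, properties).encode("utf-8")
    request = Request(url, data=body, method="MKCOL")
    request.add_header("Content-Type", "text/xml; charset=utf-8")  # assumed media type
    return request
```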

Pros:

- A single standard WebDAV MKCOL request is enough

- The standard JCR system view XML format is used for the MKCOL body

- The XML format is easy to produce

Cons:

- We need to decide the name and location of the document before it can be created

- The name of the document is duplicated, once in the URL and once in the sv:name attribute

- The date property must be specified down to the millisecond

- While standardized, the MKCOL method is not as well known as PUT or POST

- While standardized, the JCR system view format is not as well known as JSON, Atom or generic XML

- The system view XML format is quite verbose

CR2: Read a document

Now that the document is created, we can read it with a standard GET request:

$ curl --user name:pass http://localhost:8080/server/default/jcr:root/hello/
<?xml version="1.0" encoding="UTF-8"?>
<sv:node sv:name="hello"
    xmlns:fn="http://www.w3.org/2005/xpath-functions"
    xmlns:fn_old="http://www.w3.org/2004/10/xpath-functions"
    xmlns:xs="http://www.w3.org/2001/XMLSchema"
    xmlns:jcr="http://www.jcp.org/jcr/1.0"
    xmlns:mix="http://www.jcp.org/jcr/mix/1.0"
    xmlns:sv="http://www.jcp.org/jcr/sv/1.0"
    xmlns:rep="internal"
    xmlns:nt="http://www.jcp.org/jcr/nt/1.0">
  <sv:property sv:name="jcr:primaryType" sv:type="Name">
    <sv:value>nt:unstructured</sv:value>
  </sv:property>
  <sv:property sv:name="date" sv:type="Date">
    <sv:value>2009-11-17T12:00:00.000Z</sv:value>
  </sv:property>
  <sv:property sv:name="message" sv:type="String">
    <sv:value>Hello, World!</sv:value>
  </sv:property>
</sv:node>

Note that the result includes the standard jcr:primaryType property that is always included in all JCR nodes. Also all namespaces registered in the repository are included even though strictly speaking they add little value to the response.
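Unlike the Sling renderings, the system view keeps the property types. Here is a sketch of a reader, with a hypothetical function name, that turns a response like the one above into a dict mapping each property name to its type and list of values (the system view allows multi-valued properties, hence the list). The extra namespace declarations need no special handling because the XML parser consumes them.

```python
import xml.etree.ElementTree as ET

SV_NS = "{http://www.jcp.org/jcr/sv/1.0}"


def parse_system_view(xml_bytes):
    """Map property name -> (JCR type, [values]) for a system view node.

    Nested child nodes (sv:node elements) are ignored in this sketch.
    """
    root = ET.fromstring(xml_bytes)
    properties = {}
    for prop in root.findall(SV_NS + "property"):
        name = prop.get(SV_NS + "name")
        jcr_type = prop.get(SV_NS + "type")
        values = [value.text or "" for value in prop.findall(SV_NS + "value")]
        properties[name] = (jcr_type, values)
    return properties
```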

Pros:

- A single GET request is enough

- The XML format is easy to consume

Cons:

- The system view format is a bit verbose and generally not that well known

CR3: Update a document

The WebDAV feature in Jackrabbit does not support setting multiple properties in a single request, so we need to use separate requests for each property change. The easiest way to update a property is to PUT the new value to the property URL. The only tricky part is that, unless the node type explicitly says otherwise, the new value is stored as a binary stream by default. You need to specify a custom jcr-value/... content type to override that default.

$ curl --request PUT --header "Content-Type: jcr-value/date" \
    --data "2009-11-18T12:00:00.000Z" --user name:pass \
    http://localhost:8080/server/default/jcr:root/hello/date

$ curl --request PUT --header "Content-Type: jcr-value/string" \
    --data "Document date updated" --user name:pass \
    http://localhost:8080/server/default/jcr:root/hello/history

GETting the document after these changes will give you the updated property values.
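Since each property needs its own PUT, a client ends up looping over the changes. This is a minimal sketch with hypothetical names that builds one request per property and derives the jcr-value/... media type from the JCR type name. The two curl commands above use lower-case date and string; that other types follow the same pattern is an assumption.

```python
from urllib.request import Request


def put_property_request(node_url, name, jcr_type, value):
    """PUT a single property value with a jcr-value/<type> content type."""
    url = f"{node_url.rstrip('/')}/{name}"
    request = Request(url, data=value.encode("utf-8"), method="PUT")
    # Unless the node type says otherwise, Jackrabbit stores a PUT value as a
    # binary stream; the jcr-value/... media type overrides that default.
    request.add_header("Content-Type", f"jcr-value/{jcr_type.lower()}")
    return request


def update_requests(node_url, changes):
    """One request per (name, jcr_type, value) change; they cannot be batched."""
    return [put_property_request(node_url, n, t, v) for n, t, v in changes]
```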

Pros:

- Standard PUT requests are used

- No XML or other wrapper format needed, just send the raw value as the request body

Cons:

- More than one request needed

- Need to use non-standard jcr-value/... media types for non-binary values

CR4: Delete a document

Deleting a document is easy with the DELETE method:

$ curl --request DELETE --user name:pass \
    http://localhost:8080/server/default/jcr:root/hello/

That's it. Trying to GET the document after it's been deleted gives a 404 response, just as expected.
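The delete-then-verify check described above can be scripted too. This sketch (function names hypothetical, credentials omitted, so add an Authorization header as needed) requires a running repository: it sends the DELETE and then treats an HTTPError with status 404 on a follow-up GET as confirmation.

```python
from urllib.error import HTTPError
from urllib.request import Request, urlopen


def delete_request(node_url):
    """A bodiless HTTP DELETE for the node URL."""
    return Request(node_url, method="DELETE")


def delete_and_verify(node_url):
    """Delete the node, then return True if a GET now answers 404."""
    with urlopen(delete_request(node_url)):
        pass
    try:
        with urlopen(node_url):
            return False  # still readable, so the delete did not take effect
    except HTTPError as error:
        return error.code == 404
```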

Pros:

- A standard DELETE request is all that's needed

Cons:

- None.

Sunday, November 22, 2009

Daily Shoot, week 1

A week ago James Duncan Davidson and Mike Clark launched @dailyshoot, a Twitter feed that posts daily photo assignments. The idea is to encourage people who want to learn photography to practice it every day with the help of a simple assignment that fits a single tweet. I'm following Duncan's blog, so I found out about Daily Shoot the day it was launched.

So far I've completed all the assignments and I've already learned quite a bit doing so. It's very interesting to see how other people interpret the same assignments. I avoid looking at other responses before completing an assignment so that I don't end up just copying someone else's approach. Once I'm done, I look at what others have done for some nice insight into what I could have done differently. The process is quite educational.

Here's what I've shot this week:

You can click on the pictures for more background on each assignment and how I approached it. For more information on Daily Shoot, see the recently launched website.

Tuesday, November 17, 2009

Content Repository over HTTP

Two weeks ago during the BarCamp at the ApacheCon US I chaired a short session titled "The RESTful Content Repository". The idea of the session was to discuss the various ways that existing content repositories support RESTful access over HTTP and to perhaps find some common ground from which a generic content repository protocol could be formulated.

The REST architectural style was generally accepted as a useful set of constraints for the architecture of distributed content-based applications, but as an architectural style it doesn't define what the bits on the wire should look like. This is what we set out to define with the HTTP protocol as a baseline. We didn't get too far, but see below for some collected thoughts and a useful set of "test cases" that I hope to use to further investigate this idea.

Existing solutions

Many existing content repositories and related products already support one or more HTTP-based access patterns: Apache Jackrabbit exposes two slightly different WebDAV-based access points. Apache Sling adds the SlingPostServlet and default JSON and XML renderings of content. Apache CouchDB uses JSON over HTTP as the primary access protocol. Apache Solr uses XML over HTTP. Midgard doesn't have a built-in HTTP binding for content, but makes it very easy to implement such bindings. This list just scratches the surface...

There are even existing generic protocols that match at least parts of what we wanted to achieve. WebDAV has been around for ten years already, but the way it extends HTTP with extra methods makes it harder to use with existing HTTP clients and libraries. The AtomPub protocol solves that issue, but being based on the Atom format and leaving much of the server behaviour undefined, AtomPub may not be the best solution for generic content repositories.

Content repository operations over HTTP

To better understand the needs and capabilities of existing solutions, we should come up with a simple set of content operations and find out if and how different systems support those operations over HTTP. The most basic such set of operations is CRUD, i.e. how to create, read, update, and delete a document, so let's start with that. I'm giving each operation a key (CRn, as in "Content Repository operation N") and a brief description of what's expected. In later posts I hope to explore how these operations can be implemented with curl or some other simple HTTP client accessing various kinds of content repositories. I'm also planning to extend the set of required operations to cover features like search, linking, versioning, transactions, etc.

CR1: Create a document

Documents with simple properties like strings and dates are basic building blocks of all content applications. How can I create a new document with the following properties?

- title = "Hello, World!" (string)

- date = 2009-11-17 (date)

At the end of this operation I should have a URL that I can use to access the created document.

CR2: Read a document

Given the URL of a document (see CR1), how do I read the properties of that document?

The retrieved property values should match the values given when the document was created.

CR3: Update a document

Given the URL of a document (see CR1), how do I update the properties of that document? For example, I want to update the existing date property and add a new string property:

- date = 2009-11-18 (date)

- history = "Document date updated" (string)

When the document is read (see CR2) after this update, the retrieved information should contain the original title and the above updated date and history values.

CR4: Delete a document

Given the URL of a document (see CR1), how do I delete that document?

Once deleted, it should no longer be possible to read (see CR2) or update (see CR3) the document.
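The four operations read naturally as an executable test case. Below is a sketch, not part of any existing tool: run_crud_checks walks through CR1 to CR4 against any object offering hypothetical create/read/update/delete methods, and InMemoryRepository is a trivial stand-in used only to show the expected behaviour. Reads compare only the listed properties, since real repositories may add system properties of their own.

```python
from datetime import date


class InMemoryRepository:
    """Hypothetical stand-in for an HTTP content repository client."""

    def __init__(self):
        self._documents = {}
        self._counter = 0

    def create(self, properties):
        self._counter += 1
        url = f"memory:/documents/{self._counter}"
        self._documents[url] = dict(properties)
        return url

    def read(self, url):
        return dict(self._documents[url])  # KeyError plays the role of a 404

    def update(self, url, changes):
        self._documents[url].update(changes)

    def delete(self, url):
        del self._documents[url]


def check(condition, message):
    if not condition:
        raise AssertionError(message)


def matches(document, expected):
    """True if every expected property is present with the expected value."""
    return all(document.get(name) == value for name, value in expected.items())


def run_crud_checks(repo, missing=(KeyError,)):
    created = {"title": "Hello, World!", "date": date(2009, 11, 17)}
    url = repo.create(created)                                      # CR1
    check(matches(repo.read(url), created), "CR2: values differ")   # CR2
    changes = {"date": date(2009, 11, 18), "history": "Document date updated"}
    repo.update(url, changes)                                       # CR3
    check(matches(repo.read(url), {**created, **changes}), "CR3: update lost")
    repo.delete(url)                                                # CR4
    for operation in (lambda: repo.read(url), lambda: repo.update(url, changes)):
        try:
            operation()
        except missing:
            continue
        raise AssertionError("CR4: document still accessible after delete")
    return True
```

The missing parameter lets an HTTP-backed adapter pass whatever exception it raises for a 404 response.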

Tuesday, October 27, 2009

NoSQL interests

Note that the data is biased towards Apache projects due to the meetup being organized at ApacheCon US 2009.

Projects

The following open source projects were mentioned. The list is in alphabetical order, as the data set is too small to make any reasonable ordering by popularity.

- Cassandra

- CouchDB

- Hadoop

- HBase

- HDFS

- Jackrabbit

- Lucene

- Mahout

- memcached

- MongoDB

- Redis

- Riak

- Scalaris

- Sling

- Tokyo Cabinet

- Voldemort

Topics

Many responses were about the "big data" aspect of the NoSQL movement. Some frequent keywords: distributed storage, large transactional data, consistency, failover, availability, reliability, stability, failure detection, failed node replacement, (petabyte) scalability, consistency levels, storage technology, performance, benchmarks, optimization, backup and recovery, map/reduce.

Other common themes were the various database types and the NoSQL "development model". Keywords: document stores, key/value stores, consistent hashing, graph databases, object databases, persistent queues, content modeling, migration from the relational model, social graphs, streaming, software as a service, offline applications, full text search, natural language processing.

Beyond the above big themes, I found it interesting that the following technologies were specifically named: Erlang, Java, WebSimpleDB, WebDAV.

In addition to specific topics, many people were asking for case studies or "lessons learned" presentations.

Friday, October 16, 2009

Putting POI on a diet

The Apache POI team is doing an amazing job at making Microsoft Office file formats more accessible to the open source Java world. One of the projects that benefits from their work is Apache Tika, which uses POI to extract text content and metadata from all sorts of Office documents.

However, there's one problem with POI that I'd like to see fixed: It's too big.

More specifically, the ooxml-schemas jar used by POI for the pre-generated XMLBeans bindings for the Office Open XML schemas is taking up over 50% of the 25MB size of the current Tika application. The pie chart below illustrates the relative sizes of the different parser library dependencies of Tika:

Both PDF and the Microsoft Office formats are pretty big and complex, so one can expect the relevant parser libraries to be large. But the 14MB size of the ooxml-schemas jar seems excessive, especially since the standard OOXML schema package from which the ooxml-schemas jar is built is only 220KB in size.

Does anyone have good ideas on how to best trim down this OOXML dependency?

Wednesday, September 23, 2009

Some graphics work for a change

I've recently spent some effort in improving the look of the Apache Jackrabbit website. I'm no designer, so the results aren't that great, but it's been a nice break from the regular project work. And I got to brush up my Photoshop and Gimp skills.

One part of the effort was creating an icon for the site. Previously the site used the default feather icon shared by all Apache project sites, but I wanted a Jackrabbit-specific icon that helps me quickly identify and access Jackrabbit pages among the numerous tabs I usually have open in my browser. The work is a good example of incremental improvements in action:

I started with a copy of the Jackrabbit logo with a nice alpha-layered transparent background. It looked great until I noticed that some browsers dropped the smooth alpha layer and instead showed the rather badly aliased icon seen above.

The straightforward solution was to add a white background, as can be seen in step 2. That already worked pretty well in all browsers.

After a few days of watching the icon I found it a bit too blocky for my taste, so I tried to restore some of the nice transparency effect by rounding the corners a bit. I'm pretty happy with the result.

Of course, if you have design talent and think you can do better, go for it!

Saturday, September 19, 2009

Release time

There's lots of upcoming release activity at the Apache projects I'm more or less involved with:

- The incubating Apache PDFBox project is just about to publish the eagerly anticipated 0.8.0 release. I'm expecting to see the release announcement on Tuesday next week. PDFBox is a Java library for working with PDF documents.

- Another incubating project, Apache UIMA, is working towards the 2.3.0 release. I'm looking forward to seeing both UIMA and PDFBox graduate from the Apache Incubator shortly after their respective releases. UIMA is a framework and a set of components for analyzing large volumes of unstructured information.

- The Apache Sling project is component-based like Apache Felix, so there is no clear project-wide release cycle. Instead, Sling is about to start releasing new versions of most of the components that have changed since the all-inclusive incubator releases. Sling is a JCR-based web framework.

- Apache Tika uses PDFBox for extracting text content from PDF documents. I'm hoping to see a Tika 0.5 release soon with the latest PDFBox dependency and the design improvements I've been working on. Tika is a toolkit for extracting text and metadata from all kinds of documents.

- Apache Solr is about to enter code freeze in preparation for the 1.4 release, which will include the "Solr Cell" feature based on Tika. Solr is a search server based on Lucene.

- The Commons IO project has been upgraded to use Java 5 features, and I'm starting to push it towards a 2.0 release. Commons IO is a library of Java IO utilities.

- Lucene Java is gearing up for the 2.9 release, and will soon follow up with the 3.0 release. The trie range feature is an especially welcome addition for many use cases. Lucene is a feature-rich, high-performance search engine.

- And last but not least, Apache Jackrabbit is getting ready to release version 2.0 based on the recently approved JCR 2.0 standard. Jackrabbit is a feature-complete JCR content repository implementation.

I'm hoping to see most of these releases happening in time for the ApacheCon US 2009 conference in early November.

Tuesday, August 11, 2009

Apache Jackrabbit 1.6.0 released

The Apache Jackrabbit project has just released Jackrabbit version 1.6.0. This release will most likely be the last JCR 1.0-based Jackrabbit 1.x minor release before the upcoming Jackrabbit 2.0 and the upgrade to JCR version 2.0. The goal of this release is to push out as many of the recent Jackrabbit trunk improvements as possible so that the number of new things in Jackrabbit 2.0 remains manageable.

The most notable changes and new features in this release are:

- The RepositoryCopier tool makes it easy to back up and migrate repositories (JCR-442). There is also improved support for selectively copying content and version histories between repositories (JCR-1972).

- A new WebDAV-based JCR remoting layer has been added to complement the existing JCR-RMI layer (JCR-1877, JCR-1958).

- Query performance has been further optimized (JCR-1820, JCR-1855 and JCR-2025).

- Added support for Ingres and MaxDB/SapDB databases (JCR-1960, JCR-1527).

- Session.refresh() can now be used to synchronize a cluster node with changes from the other nodes in the cluster (JCR-1753).

- Unreferenced version histories are now automatically removed once all the contained versions have been removed (JCR-134).

- Standalone components like the JCR-RMI layer and the OCM framework have been moved to a separate JCR Commons subproject of Jackrabbit, and are not included in this release. Updates to those components will be distributed as separate releases.

- Development preview: There are even more JSR 283 features in Jackrabbit 1.6 than were included in the 1.5 version. These new features are accessible through special "jsr283" interfaces in the Jackrabbit API. Note that none of these features are ready for production use, and will be replaced with final JCR 2.0 versions in Jackrabbit 2.0.

This release is the result of contributions from quite a few people. Thanks to everyone involved, this is open source in action!

Saturday, July 18, 2009

JCR 2.0 implementation progress

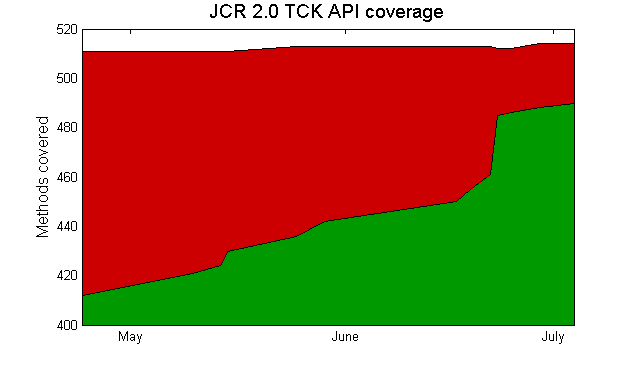

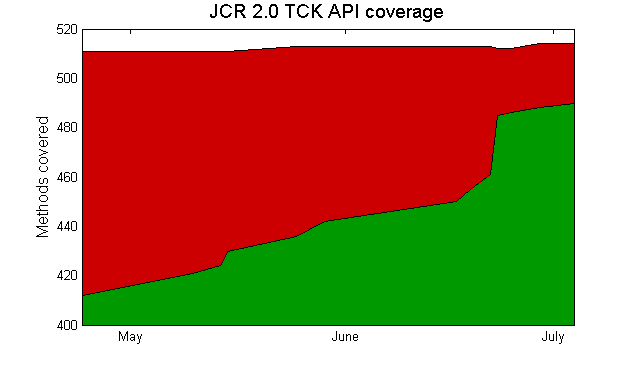

The JCR 2.0 API specified by JSR 283 has been in Proposed Final Draft (PFD) stage since March, and Apache Jackrabbit developers have been busy implementing all the specified new features and adding compliance test cases for them.

Both the Reference Implementation (RI) and the Technology Compatibility Kit (TCK) of JSR 283 will be based on Jackrabbit code, and we expect the final version of the specification to be released shortly after Jackrabbit trunk becomes feature-complete and the API coverage of the TCK reaches 100%. The following two graphs illustrate our progress on both these fronts.

First, a graph tracking all the JCR 2.0 implementation tasks we've filed under the JCR-1104 umbrella issue. The amount of work per sub-task is not uniform, so this graph only shows the general trend and does not suggest any specific completion date.

The second graph tracks the TCK API coverage. We started with the JCR 1.0 TCK, so the first 300-400 method signatures were already covered with only a few changes to existing test code. Based on Julian's API coverage reports in JCR-2085, this graph tracks progress in covering the 100+ new method signatures introduced in JCR 2.0. Again, the graph is meant to show just a general trend and should not be used to extrapolate future progress.

Want to see JCR 2.0 in action? The latest Jackrabbit 2.0 alpha releases are available for download!

Wednesday, June 3, 2009

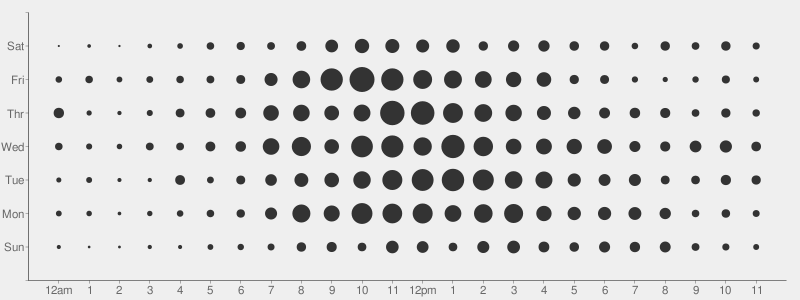

Commits per weekday and hour

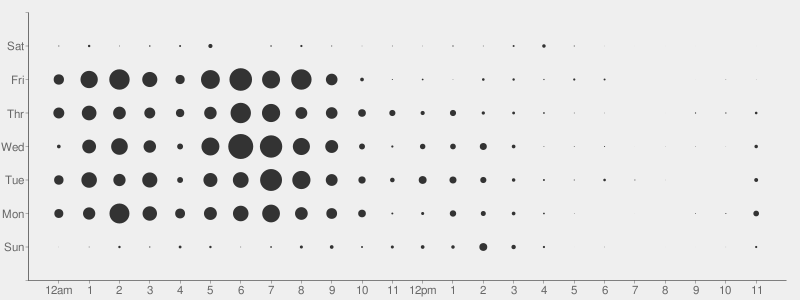

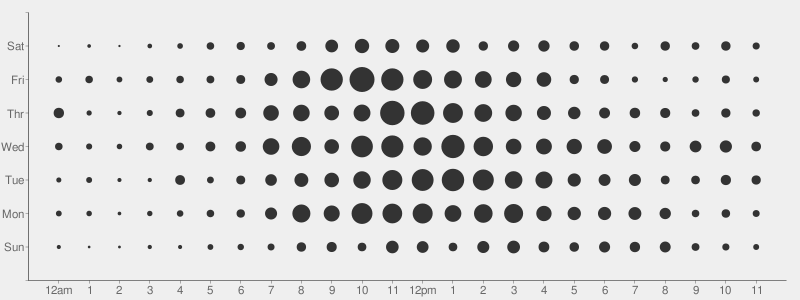

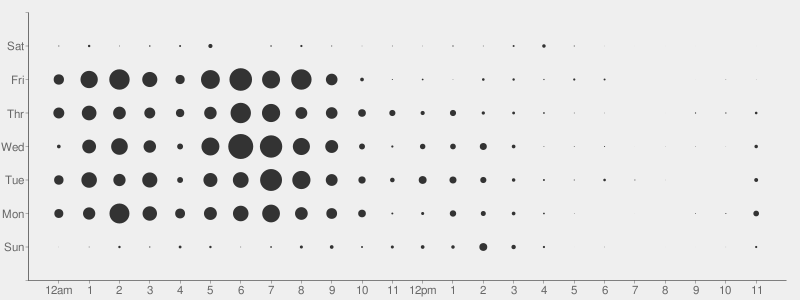

The punchcard graphs at GitHub are a nice way to quickly detect the rough geographical distribution (or nighttime coding habits) of the key contributors to an open source project. Here are a few selected examples from the ASF.

Apache HTTP Server

Apache Maven (core)

Apache Jackrabbit

Sunday, May 17, 2009

Would you trust a pirate?

Apparently a Pirate Party is now being set up in Finland as well. I guess it's good to have a political force that questions the appropriateness of traditional copyright in the digital world. However, as a knowledge worker I'm not that excited about drastic changes in the protection of immaterial rights.

Anyway, my appreciation for the movement in Finland went down considerably when I saw their spokesman in the news today. When asked about the main goals of the new party he only mentioned freedom of speech and protection of privacy. Did he just forget the massive overhaul of copyright and patent laws that they're primarily after?

Saturday, May 9, 2009

Midgard: Where it all began

On Friday we celebrated the tenth anniversary of the Midgard project. The celebration took the form of a very nice gala evening with good food and drinks with live music, show and of course some speeches. I was asked to deliver a few words about how it all began for Midgard.

Here's my speech, reconstructed from my draft notes and edited for the web audience:

We were a group of teenagers and young adults doing historical re-enactment and live action role-playing games. One evening in early '97 we were sitting on a bus, returning from the woods with all our Viking gear on. Bergie said to me: "Hey Yaro", as I was known as Yaroslav at the time. "Hey Yaro", he said, "you're over 18 and you have a driver's license. Would you like to take a dozen teenagers on a trip to Norway and back?" Even back then Bergie was the one with big dreams and the power to inspire people. I had the skills required to make those dreams happen but not yet enough experience to tell that we perhaps should think twice. So I just answered: "Sounds cool, let's do it!" That's pretty much what happened with Midgard as well.

The trip to Norway went well for us and was followed by a number of other adventures. One of them was our quest to build a better web site for our group. It was '97 and the web was booming. The de facto web publishing technology was FTP, which people used to push static HTML to a web server. GeoCities was the big thing, as it allowed you to publish your static HTML for free. We however had bigger plans and our own server running in the closet of a friendly internet company. And we were publishing lots of stuff: news, photos, articles, etc. Quite a few people were actively contributing new content to the web site.

Our first serious attempt at better managing the site was based on technologies called SGML and DSSSL. For the technically minded: nowadays you'd use XML and XSLT for similar tasks. We used this system to "cook" our content into nicely formatted HTML that was then served to the world. It worked pretty well, but was hopelessly complex for almost all of our contributors. This was a time when people were only just discovering the Internet. Most of our contributors were teenagers who were using the net from libraries or schools. Modem connections were only just finding their way into ordinary households. Even FTP was often out of the question, so there was little hope of making the heavy SGML tooling work as well as we'd like.

We wanted a system that could be managed entirely through the browser. Not just the content you saw on the web site, but the layout templates and even the functional code used to list pages or to handle the forms for adding or modifying content. The system should allow you to build an entire web site, including all the administration interfaces, without any other tooling than a web browser. Such systems simply didn't exist at the time and in fact they're pretty rare even today.

So we had to build our own system. We looked at a number of potential platforms for something like this, and the LAMP stack seemed like a good fit. Our server already ran Linux and, like pretty much everyone, we used the Apache web server. We hadn't used PHP or MySQL before, but they were getting some good press and were easy enough to get started with. In fact we hadn't done much of anything when we started: we hadn't written any Apache modules, we hadn't extended (or even written!) PHP, and at the time I had only read about relational databases. As we used to say: "How hard can it be?" We didn't know, and so we just did it.

The result of our efforts was called Midgard. We had used it to power our web site for about a year when Bergie was hired to build a new web site for a Finnish tech company. Midgard seemed like a good fit for that need, and we figured that other people might also find the system useful. Open source was cool and we wanted to join the movement, so we decided to publish Midgard as open source. After nights spent researching licensing options, writing press releases, creating the project web site and setting up mailing lists and public CVS access we were finally ready to publish Midgard 1.0 to the world. That happened exactly ten years ago.

The 1.0 release was like the Land Rover it was named for. The magnificent car from '62, which we used on many of our trips, was really cool and when it worked, it did so very well. However, every now and then it required some "manual help" to get it started or to keep it going. This was also the case for Midgard 1.0. The first external installation that I know of was done on a Solaris platform and required a few days' worth of help and patches delivered over the mailing list before it was up and running. Much of that early feedback and experience was reflected in Midgard 1.1, our first release that people actually managed to install and run without direct assistance. That started the growth of the Midgard community.

Meanwhile I had also been hired by the same company where Bergie worked, and much of our work there resulted in improvements to Midgard. Together with the feedback and early contributions we were getting from the mailing lists, this made Midgard 1.2 already a pretty solid piece of software. It was fairly straightforward to install (by the standards of the time), it performed well and it had most of the functionality that you'd need to run a moderately complex web site.

And the results were showing. We were getting increasing traffic on the mailing lists, some companies started offering Midgard support and the number of Midgard-based sites around the world was growing. One of my earliest concrete rewards for doing open source was a bottle of quality whiskey that a Midgard user from Germany sent me with a note saying: "Thanks for Midgard!" The whiskey is long gone, but I still treasure the memory. A few years later Bergie and a few other friends and Midgard developers went on to start their own company based on Midgard. I was tempted to join them, but at the time my life was taking a different route and I gradually left Midgard to pursue other things.

Seeing the Midgard project take off and build a life of its own has been a very inspiring process for me. Having your first open source project become so successful is pretty amazing and also quite humbling. Looking at all the things Midgard is today fills me with pride, not in what I've done, but in what you, the Midgard community, have accomplished. Thank you for that. Especially I'd like to thank my long-time friend and co-conspirator in starting the Midgard project. Bergie, without your dreams and refusal to take "no" for an answer we wouldn't be here today. Thank you.

Monday, April 27, 2009

Content Technology at the ApacheCon US 2009

I'm putting together a plan for a Content Technology track at the ApacheCon US 2009 in Oakland later this year. The original plan for the track was focused on JCR and related stuff, but there's some interest in expanding the scope to cover a wider range of things related to content management and web publishing.

The track proposal has been discussed on the Jackrabbit and Sling mailing lists, and people from POI and Lenya have chimed in with interest. I also contacted Wicket, Cocoon, JSPWiki and Roller about their interest, and the initial feedback seems good. Any other projects I should be contacting?

I'm not sure how this works for the conference planners, who are probably facing some real deadlines in terms of fixing the conference schedule and contacting selected speakers. Let's see how it all plays out.

Update: Added JSPWiki and Roller.

Saturday, April 4, 2009

One month, five languages

The past month was probably the first time in about 20 years when the number of natural languages I used was greater than the number of programming languages I wrote code in. I've never thought of myself as much of a language person, but here I am actively using five different languages! Here's a list of the languages in order of my fluency.

Finnish

Of course. I was in Finland twice in the past month and every other day or so I spend a lot of time on Skype talking Finnish with Kikka. I read Finnish news every day, and keep in contact with my Finnish friends mostly through various Internet channels.

My main concern with my Finnish is that nowadays I don't do much serious writing in Finnish. Of course I write letters, postcards and email to friends and family, but that's about it. I used to be a fairly good writer (grammatically, etc., not so much artistically), but now I think my skills are rapidly eroding.

English

English is currently the language I use most actively. I speak it daily at work and elsewhere. I read and write piles of email in English every day. All the code and documentation that I read and write is in English, just like the various tech and world affairs sites and blogs I follow.

Even though I understand English well and can get myself understood with little trouble, I still don't think I'm particularly good with the language. As they say: The universal language of the world is not English; the truly universal language is bad English. The last time I actually studied English was in high school 15 years ago, so I believe I would really benefit from taking some more advanced courses on the finer points of the language.

Swedish

Learning Swedish is mandatory in Finland, so I spent ten years studying the language at school. Thus I have a reasonably strong theoretical background in the language, but since I very rarely use it anywhere my practical skills aren't that great. Prodded by Kikka to do something about that, I recently bought and started reading Conn Iggulden's book Stäppens Krigare (Wolf of the Plains) in Swedish. The first 20 or so pages were a struggle, but then it all came back to me and now I'm going strong at around page 200 and can barely set the book aside.

The funny thing about the Swedish I've learned is that it's not really what they speak in Sweden, but rather a dialect spoken only by a small Swedish-speaking minority in Finland. I have a feeling that I'm going to end up with something similar, just on a larger scale, also for German...

French

I've never been too enthusiastic about learning languages, so in high school I dropped French (which I had studied for two years before that) in favor of more math and physics. I did some more French courses at the university to fill up the mandatory language studies, but I've never really mastered the language. However, I have relatives in France and Morocco, so I do have a "live" connection to the language that I've lately tried to keep up through occasional visits.

My latest visit was a few weeks ago when I took the TGV train from Basel for a quick weekend visit to Paris. During the visit I tried to speak as much French as I could, and was able to keep up reasonably well when people around me were speaking French.

German

Last but not least. I started actively learning German when I moved to Switzerland about half a year ago. First I used an online course, and after finishing it I've now been taking an evening course with a real teacher and a group of seven students. It's hard work, especially since the Swiss German I hear around me every day is quite different from the Standard German I'm learning at the course.

I can increasingly well manage simple shopping and restaurant interactions in German, and I try to read (or at least browse) the local newspapers every day. I've also started using the German Wikipedia as my first source of any non-technical trivia. I go there a few times a week and only switch to the English counterpart when I can't figure out some specific details.

I guess my studies are starting to take effect, as my first germanism already found its way into a tweet I posted yesterday. Earlier this week I also had my first dream in German! In my dream I continued doing the German exercises that I had been doing when I fell asleep...

What's missing?

All the languages I'm using are (originally) European. I'd really love a chance to brush up my Japanese (I studied it for a while at the university) or learn the basics of Mandarin (and Arabic would be cool too), but I guess that for the next few years I'll be too busy getting up to speed with German to even consider doing something new.

Finnish

Of course. I was in Finland twice in the past month and every other day or so I spend a lot of time on Skype talking Finnish with Kikka. I read Finnish news every day, and keep in contact with my Finnish friends mostly through various Internet channels.

My main concern with my Finnish is that nowadays I don't do much serious writing in Finnish. Of course I write letters, postcards and email to friends and family, but that's about it. I used to be a fairly good writer (grammatically, etc., not so much artistically), but now I think my skills are rapidly eroding.

English

English is currently the language I use most actively. I speak it daily at work and elsewhere. I read and write piles of email in English every day. All the code and documentation that I read and write is in English, just like the various tech and world affairs sites and blogs I follow.

Even though I understand English well and can get myself understood with little trouble, I still don't think I'm particularly good with the language. As they say: The universal language of the world is not English; the truly universal language is bad English. The last time I actually studied English was in high school 15 years ago, so I believe I would really benefit from taking some more advanced courses on the finer points of the language.

Swedish

Learning Swedish is mandatory in Finland, so I spent ten years studying the language at school. Thus I have a reasonably strong theoretical background in the language, but since I very rarely use it anywhere my practical skills aren't that great. Prodded by Kikka to do something about that, I recently bought and started reading Conn Iggulden's book Stäppens Krigare (Wolf of the Plains) in Swedish. The first 20 or so pages were a struggle, but then it all came back to me and now I'm going strong at around page 200 and can barely set the book aside.

The funny thing about the Swedish I've learned is that it's not really what they speak in Sweden, but rather a dialect spoken only by a small Swedish-speaking minority in Finland. I have a feeling that I'll end up with something similar for German, just on a larger scale...

French

I've never been too enthusiastic about learning languages, so in high school I dropped French (which I had studied for two years) in favor of more math and physics. I took some more French courses at the university to fulfill the mandatory language requirements, but I've never really mastered the language. However, I have relatives in France and Morocco, so I do have a "live" connection to the language that I've lately tried to keep up through occasional visits.

My latest trip was a few weeks ago, when I took the TGV from Basel for a quick weekend in Paris. During the visit I tried to speak as much French as I could, and was able to keep up reasonably well when people around me were speaking French.

Thursday, March 26, 2009

Maven meetup report

A few days late, here's a quick report on what I managed to do on Monday here at ApacheCon EU. As mentioned earlier, I arrived at the conference hotel on Monday evening and headed straight for the Maven meetup.

Maven meetup

The meetup was already in progress when I arrived, but I managed to catch part of a presentation about the Eclipse integration, which just keeps getting better. Nowadays it's so easy to import and manage Maven projects in Eclipse that I get really annoyed every time I need to manually set things up for projects with Ant builds.

Other interesting topics covered were Maven archetypes and the release plugin. For a long time I've been thinking about creating some archetypes to help set up new JCR client applications. We should probably also do something similar for setting up new Sling bundles.

The release plugin demo was interesting, though I'm not so sure if I agree with all the conventions and assumptions that the plugin makes. On a related note, we should configure the GPG plugin for the Maven build in Jackrabbit.

We talked a bit about Maven 2.1.0 and the upcoming 3.0 release. I'm already pretty happy with the recent Maven 2.0.x releases, so we'll probably take a while before upgrading, but it's good to hear that things are progressing on multiple fronts. We also briefly touched on the differences between the Maven and OSGi dependency models and the ways to better bridge the two worlds.

In summary, the meetup was really interesting and gave me a better idea of what's up in the Maven land. Thanks to everyone involved!

Chops, ribs and beer

After the meetup a few of us headed out to the Amsterdam city center for some food and drinks. Monday evening perhaps wasn't the best time to go out, as we had to wander around looking for places that would stay open late enough. Anyway, we found some "interesting" places to visit before returning to the hotel in the early hours. Good times.

Monday, March 23, 2009

ApacheCon plans

It's ApacheCon time again. I'll be flying to Amsterdam later today, and will probably be pretty busy for the entire week. Some highlights:

Monday

- Maven meetup. I'll probably arrive at the conference hotel just in time for the Maven meetup, where I'm hoping to catch up with the latest news from the Maven land.

Tuesday

- Git hacking. During the Hackathon on Tuesday I hope to get together with Grzegorz and anyone else interested in setting up git.apache.org.

- Commons Compress. There's some useful code in the Commons Compress component that I hope to use in Apache Tika. If I have time during the Hackathon I want to help push the component towards its first release.

- CMIS / Chemistry update. I've been meaning to check out the CMIS code that Florent Guillaume has been working on recently. I'd love to get the effort better integrated into Jackrabbit.

- Commons XML. I've been gathering some JAXP utility code into a new XML library in the Commons sandbox. I hope to spend some time pushing more code there and perhaps discussing the concept with some interested people.

- Juuso lab. I have lots of new ideas about RDF processing and Prolog. Hoping to turn those into working code.

- Lucene meetup. Catching up with the latest in Lucene and telling people about Tika and the Lucene integration we have in Jackrabbit. Unfortunately I only have one hour to spend here before the JCR meetup starts.

- JCR meetup. Starting at 8pm, the JCR meetup is one of the key highlights of the conference for me. We'll be covering stuff related to the Jackrabbit and Sling projects. You're welcome to join us (sign up here) if you're interested in the latest news from the content repository world.

Wednesday

- Content Storage with Apache Jackrabbit. I'll be presenting at 16:30.

- Rapid JCR applications development with Sling. Bertrand's presentation at 17:30.

- JCR with Apache Jackrabbit and Apache Sling. BOF session at 21:30.

And lots of other stuff, too much to keep track of...

Saturday, February 14, 2009

When all you have is a hammer

Helsingin Sanomat, a newspaper in Finland, has an article (in Finnish) where some experts give advice on what Helsinki should do to succeed among the metropolises of the world. I'm paraphrasing:

- Humanist: "Multiculturalism"

- Environmentalist: "Save energy"

- Architect: "More buildings"

Who's got the big picture?

First flowers of the year

After recovering from the flu, I went for a walk around Basel and found a nice park about 1.5 kilometers from where I live. It's been a bit rainy here lately, but the sun is already pretty warm when it peeks from behind the clouds.

[Photo: Flowers in a sunny spot]

There was this sunny spot beside a tree where all these small yellow flowers were pushing up from the ground. I just had to take a closer look.

[Photo: Closeup of the flowers]

I took some other pictures as well. It was a nice afternoon.

Monday, February 9, 2009

Comparing Midgard and JCR